Artificial Neural Network in Machine Learning

An artificial neural network (ANN) is a computational model that seeks to emulate the network of neurons that makes up the human nervous system, so that machines can interpret data and make decisions in a human-like way. An ANN is built from simple processing units that behave, in essence, like interconnected neurons. Let’s learn more about artificial neural networks in machine learning.

What is Artificial Neural Network in Machine Learning?

ANNs are nonlinear statistical models that capture complex relationships between inputs and outputs in order to uncover patterns in data. They are used for a range of tasks, including image recognition, natural language processing, machine translation, and clinical diagnosis.

One significant benefit of an ANN is that it learns from sample data. The most typical use of an ANN is approximating arbitrary functions. With such tools, one can arrive at solutions that describe the underlying distribution in a cost-effective manner. An ANN can also produce output based on a sample of the data rather than the complete dataset. Because of their strong predictive capabilities, ANNs can be used to improve existing data analysis approaches.

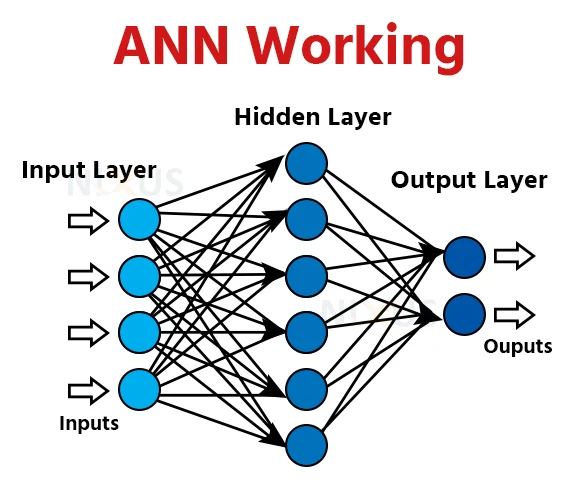

Artificial Neural Network Architecture:

A neural network comprises multiple layers, and each layer performs a specialized job. As the network’s sophistication grows, so does the number of hidden layers, which is why it is described as a multi-layer perceptron.

In its simplest form, a neural network includes three layers: the input layer, the hidden layer, and the output layer. The input layer receives the input data and passes it to the next layer, and eventually the output layer provides the final result. Like other machine learning models, a neural network must be trained on training data before it can solve a specific problem.

ANN Working:

The input layer receives the data in numeric form. Each input carries an activation level, with a value assigned to each component: the higher the value, the higher the level of activity. The activation value is passed to the next node according to the connection weights and the activation function. Each unit computes the weighted sum of its inputs and transforms it with its transfer function (activation function). Each neuron applies this function to its own weighted sum and, based on the result, determines whether or not to pass the signal on. During training, the ANN adjusts the weights, which determines how strongly each signal is propagated.

The activation travels through the network until it reaches the output nodes. The output layer presents the result in an understandable form. The network compares its output with the desired output using a cost function, which measures the gap between the actual and expected values. The smaller the cost, the closer the output is to the desired result.
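
As a minimal sketch of the cost function idea, the snippet below uses mean squared error, which is one common choice and an assumption here since the article does not name a specific cost. The function name `mse_cost` and the sample values are hypothetical.

```python
def mse_cost(predicted, expected):
    """Mean squared error between the network's outputs and the desired outputs."""
    if len(predicted) != len(expected):
        raise ValueError("predicted and expected must have the same length")
    return sum((p - e) ** 2 for p, e in zip(predicted, expected)) / len(predicted)

# Hypothetical desired output and two candidate network outputs.
expected = [1.0, 0.0]
far_output = [0.4, 0.6]    # far from the desired values
near_output = [0.9, 0.1]   # close to the desired values

far_cost = mse_cost(far_output, expected)
near_cost = mse_cost(near_output, expected)
print(far_cost, near_cost)
```

The output that is closer to the desired values yields the smaller cost, which is the quantity training tries to reduce.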

Input layer:

As the term indicates, it receives inputs in a variety of forms specified by the developer.

Hidden layer:

The hidden layer sits between the input and output layers. It does most of the computation needed to uncover hidden features and patterns.

Output layer:

The output layer takes the input as transformed by the hidden layer and delivers the final output.

Each artificial neuron receives its inputs and calculates their weighted sum plus a bias. This calculation is expressed as a transfer function, and its result is passed to an activation function to produce the output.

The function applied to the weighted sum to obtain the output is referred to as the activation function. There are several types of activation functions:
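
The weighted sum with a bias can be sketched in a few lines. The function name `net_input` and the numbers are hypothetical, chosen only to illustrate the arithmetic.

```python
def net_input(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias term for a single neuron."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias

# Hypothetical neuron with three inputs.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, 0.1]
bias = 0.2

# 0.4*0.5 + 0.3*(-1.0) + 0.1*2.0 + 0.2
z = net_input(inputs, weights, bias)
print(z)
```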

1. Binary:

The output of a binary activation function is either 1 or 0. A threshold value is set to decide between the two. If the total weighted input of the neuron is greater than the threshold (1 in this example), the activation function’s output is 1; otherwise, it is 0.

2. Sigmoidal hyperbolic:

The sigmoidal function is often referred to as the “S” curve. To estimate the output from the real-valued net input, the hyperbolic tangent or the closely related logistic function is used. The logistic form is described as follows:

F(x) = 1 / (1 + exp(-σx))

The steepness parameter is denoted by σ. The hyperbolic tangent is a rescaled version of the same curve, with outputs between -1 and 1 instead of between 0 and 1.
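
The two activation functions described in this list can be sketched as follows. The function names are hypothetical; the default threshold of 1 follows the binary example in the text, and `steepness` stands for the steepness parameter of the S curve.

```python
import math

def binary_step(net_input, threshold=1.0):
    """Return 1 when the net input exceeds the threshold, otherwise 0."""
    return 1 if net_input > threshold else 0

def sigmoid(net_input, steepness=1.0):
    """Logistic "S" curve; a larger steepness gives a sharper transition."""
    return 1.0 / (1.0 + math.exp(-steepness * net_input))

for net in (-2.0, 0.5, 1.5):
    print(net, binary_step(net), round(sigmoid(net), 3), round(sigmoid(net, steepness=5.0), 3))
```

The binary function jumps abruptly at the threshold, while the sigmoid changes smoothly, which is what makes it usable with gradient-based training.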

Back-propagation:

To train a neural network, we present it with examples of input-output mappings. The network predicts an output for each input, and an error function measures how far that prediction is from the expected output. The weights are then adjusted to reduce the error, using gradient descent with gradients computed through the chain rule. Finally, when training is complete, we test the network on inputs without providing the expected outputs.
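
A minimal sketch of this training loop, assuming a deliberately tiny network (one input, one hidden unit, one output unit, all with sigmoid activations) and a squared-error cost. The names, starting weights, learning rate, and training example are all hypothetical; a real network would have many units and many examples.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_step(x, target, params, learning_rate):
    """One forward pass, one backward pass (chain rule), one gradient-descent update."""
    w1, b1, w2, b2 = params
    # Forward pass.
    h = sigmoid(w1 * x + b1)          # hidden activation
    y = sigmoid(w2 * h + b2)          # network output
    cost = 0.5 * (y - target) ** 2
    # Backward pass: apply the chain rule from the cost back to each weight.
    delta_out = (y - target) * y * (1 - y)      # d(cost) / d(output net input)
    delta_hidden = delta_out * w2 * h * (1 - h) # d(cost) / d(hidden net input)
    grads = (delta_hidden * x, delta_hidden, delta_out * h, delta_out)
    # Gradient descent: move each parameter against its gradient.
    new_params = tuple(p - learning_rate * g for p, g in zip(params, grads))
    return new_params, cost

params = (0.5, 0.0, 0.5, 0.0)   # hypothetical starting values for w1, b1, w2, b2
costs = []
for _ in range(200):
    params, cost = train_step(x=1.0, target=0.0, params=params, learning_rate=0.5)
    costs.append(cost)

print(costs[0], costs[-1])
```

The cost recorded at the last step is lower than at the first, showing the weights moving toward values that reproduce the training example.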

ANN types

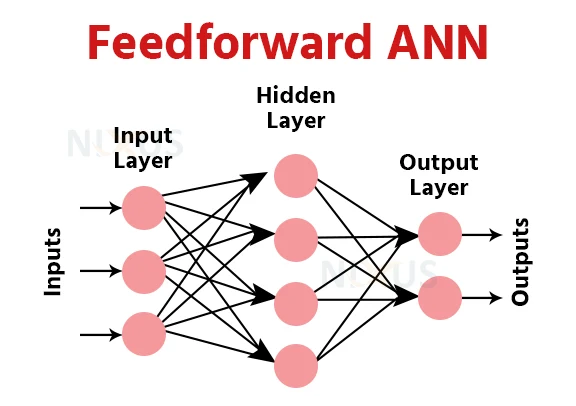

1. Feedforward ANN:

In its basic form, this network has three layers: input, hidden, and output. Signals can travel in one direction only. The input data is sent to the hidden layer, which performs the arithmetic computations: each processing element calculates the weighted sum of its inputs. The output of one layer becomes the input of the next. This process is repeated through all layers and determines the final output.
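
The one-directional flow can be sketched as a loop over layers, where each layer's output becomes the next layer's input. This is an illustrative sketch: the `feedforward` function, the sigmoid activation, and the weights are assumptions, not values from the article.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def feedforward(inputs, layers):
    """Pass the inputs through each layer in order.

    layers is a list of (weights, biases) pairs; weights[j] holds the
    incoming weights of unit j in that layer.
    """
    activations = inputs
    for weights, biases in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(unit_weights, activations)) + b)
            for unit_weights, b in zip(weights, biases)
        ]
    return activations

# Hypothetical network: 2 inputs -> 2 hidden units -> 1 output unit.
layers = [
    ([[0.5, -0.4], [0.3, 0.8]], [0.1, -0.2]),  # hidden layer
    ([[1.2, -0.7]], [0.05]),                   # output layer
]
result = feedforward([1.0, 0.0], layers)
print(result)
```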

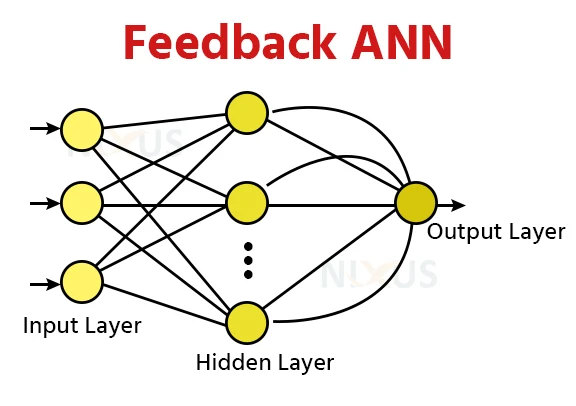

2. Feedback ANN:

There are feedback paths in this network. Through loops, signals can travel in both directions. Neurons can form any number of interconnections. Because of the loops, it is a dynamic network whose state changes continually until it reaches equilibrium.
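
One way to picture a network settling into equilibrium is to feed each unit's output back into the network and repeat until the state stops changing. The sketch below is only an illustration under stated assumptions: the `settle` function, the tanh activation, and the small weights are hypothetical, and the weights are kept small on purpose because convergence is not guaranteed for arbitrary feedback weights.

```python
import math

def settle(weights, external_input, max_steps=100, tolerance=1e-9):
    """Repeatedly update every unit from the others' outputs until the state is stable."""
    state = [0.0] * len(external_input)
    for step in range(1, max_steps + 1):
        new_state = [
            math.tanh(sum(w * s for w, s in zip(row, state)) + x)
            for row, x in zip(weights, external_input)
        ]
        change = max(abs(n - s) for n, s in zip(new_state, state))
        state = new_state
        if change < tolerance:
            return state, step
    return state, max_steps

# Hypothetical two-unit network where each unit feeds back into the other.
weights = [[0.0, 0.3], [-0.2, 0.0]]
external_input = [0.5, -0.1]
state, steps = settle(weights, external_input)
print(state, steps)
```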

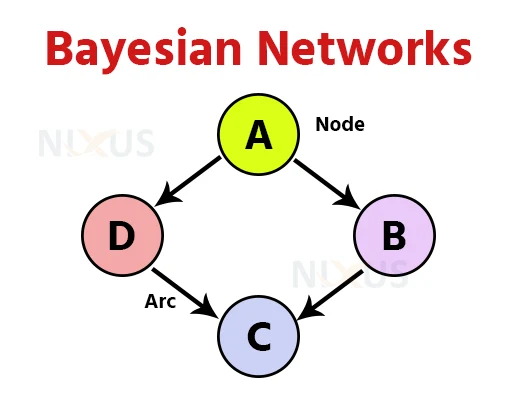

3. Bayesian networks:

These networks use a probabilistic modeling method that computes probabilities using Bayesian inference. Belief networks are another name for this sort of network. Edges link the vertices in a Bayesian network, showing the probabilistic relationships among various random variables.

If one node affects another, the two lie along the same line of influence. The strength of the relationship is quantified by the probabilities associated with each node. Given observations of some variables, one can infer the others from these connections.
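
The inference step can be illustrated with the smallest possible network: two nodes joined by one edge. The scenario (rain influencing wet grass) and every probability below are hypothetical values chosen for the example.

```python
def posterior(prior, likelihood_if_true, likelihood_if_false):
    """Bayes' rule: probability of the cause given that the effect was observed."""
    evidence = prior * likelihood_if_true + (1 - prior) * likelihood_if_false
    return prior * likelihood_if_true / evidence

# Hypothetical two-node network: Rain -> WetGrass.
p_rain = 0.2               # P(Rain)
p_wet_given_rain = 0.9     # P(WetGrass | Rain)
p_wet_given_dry = 0.1      # P(WetGrass | no Rain)

p_rain_given_wet = posterior(p_rain, p_wet_given_rain, p_wet_given_dry)
print(round(p_rain_given_wet, 4))
```

Observing the effect raises the probability of the cause above its prior, which is the kind of deduction the edge between the two nodes supports.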

What method is used to re-calibrate the weights?

Weight recalibration is a simple yet time-consuming operation. The output nodes are the only ones whose error can be measured directly. The error at the output nodes drives the recalibration of the weights on the connections between the hidden nodes and the output nodes. However, how can we determine the error at the hidden nodes? In principle, it can be shown that each hidden node’s error can be derived from the output errors, passed backward through the connecting weights, which is the idea behind back-propagation.
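
A minimal sketch of how error can be attributed to hidden nodes, assuming sigmoid hidden units and the standard back-propagation rule: each hidden node receives the output errors weighted by its outgoing connections, scaled by the slope of its own activation. The function name and the numbers are hypothetical.

```python
def hidden_errors(hidden_activations, output_weights, output_errors):
    """Propagate output-node errors back to the hidden nodes.

    output_weights[k][j] is the weight from hidden unit j to output unit k.
    Assumes sigmoid hidden units, whose slope is h * (1 - h).
    """
    errors = []
    for j, h in enumerate(hidden_activations):
        backward_sum = sum(
            output_weights[k][j] * output_errors[k]
            for k in range(len(output_errors))
        )
        errors.append(backward_sum * h * (1 - h))
    return errors

# Hypothetical values: two hidden units feeding one output unit.
hidden_activations = [0.6, 0.5]
output_weights = [[1.2, -0.7]]
output_errors = [0.1]

errors = hidden_errors(hidden_activations, output_weights, output_errors)
print(errors)
```

A hidden node with a stronger connection to an erroneous output receives a larger share of the error, and the sign follows the sign of the weight.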

Relationship between Biological neural network and artificial neural network:

The human brain has around 100 billion neurons. Each neuron has between 1,000 and 100,000 connection points. Information is stored in the brain in a distributed way, and we can retrieve more than one item of it from memory at a time when needed. In that sense, the human brain is made up of highly complex structures.

To better understand the artificial neural network, take the example of a digital logic gate that receives inputs and produces an output. The “OR” gate accepts two inputs. If one or both of the inputs are “On,” the output is “On.” If both inputs are “Off,” the output is “Off.” In this case, the output is fully determined by the input. Our brains do not work the same way: because the neurons in our brain are “learning,” the relationship between inputs and outputs is always changing.
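
To contrast a fixed gate with a unit that learns, the sketch below trains a single artificial neuron to reproduce the “OR” behaviour from examples. It assumes the classic perceptron learning rule with a step activation; the function names, learning rate, and epoch count are hypothetical choices.

```python
def step(net):
    return 1 if net > 0 else 0

def train_or_gate(epochs=20, learning_rate=0.1):
    """Learn OR from its truth table by nudging weights after each mistake."""
    samples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            output = step(weights[0] * x1 + weights[1] * x2 + bias)
            error = target - output
            # The connection strengths change only when the output is wrong.
            weights[0] += learning_rate * error * x1
            weights[1] += learning_rate * error * x2
            bias += learning_rate * error
    return weights, bias

def predict(weights, bias, x1, x2):
    return step(weights[0] * x1 + weights[1] * x2 + bias)

weights, bias = train_or_gate()
predictions = [predict(weights, bias, a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(predictions)
```

Unlike the hard-wired gate, the neuron starts with no knowledge of OR and arrives at the same input-output behaviour by adjusting its weights.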

ANN applications:

1. Speech recognition:

Speech recognition relies heavily on artificial neural networks (ANNs). Earlier speech recognition techniques used statistical models such as Hidden Markov Models. With the introduction of deep learning, several forms of neural networks have become the dominant way to achieve accurate classification.

2. Signature classification:

When building a strong authentication system, we employ ANNs to recognize signatures and categorize them by the person they belong to. Moreover, neural networks can determine whether or not a signature is genuine.

3. Handwriting recognition:

ANNs are employed to recognize handwritten characters. Handwritten symbols, whether letters or digits, are recognized by neural networks.

4. Facial recognition:

We employ neural networks to recognize faces and match them to a user’s identity. They are typically used in settings where only authorized users are granted access. The most common form of ANN used in this area is the convolutional neural network (CNN).

ANN advantages:

1. Parallelization: An ANN can process in parallel, so it has the computational capacity to carry out several tasks simultaneously.

2. Fault tolerance: The loss of one network component does not prevent the network as a whole from operating. This property makes it fault-tolerant.

3. Training time: Because a neural network learns from examples, it can handle similar new inputs without being reprogrammed for each case.

4. Memory distribution: For an ANN to adapt, it is critical to choose suitable examples and to train the network toward the expected result by presenting these examples to it. The network’s success is directly related to the selected examples, and if an event cannot be shown to the network in all its aspects, the network may produce erroneous results.

ANN disadvantages:

1. Confidence: The most significant downside of an ANN is its black-box nature. The neural network does not provide an adequate explanation of how it arrived at its output, which undermines confidence in the network.

2. Development time: The time needed to develop the network cannot be determined in advance.

3. Architecture: There is no guarantee that the network architecture is correct. There is no specific rule for determining the architecture.

4. Hardware dependency: ANNs, due to their architecture, require computers with parallel processing capacity. As a result, they are dependent on hardware.

5. Performance: After training, an ANN may still produce output even when the input data is incomplete. How much the performance degrades depends on how important the missing information is.

Conclusion

ANNs are basic mathematical structures that are used to improve conventional data processing technology. Although they cannot match the capability of the human mind, they are the cornerstone of deep learning.

We hope this article gave you an overview of the Artificial Neural Network (ANN) framework. Even though it hasn’t gone into detail about the derivation or the implementation, the material in this post should be enough for you to grasp and implement the method.