Create a Chatbot using Python & NLTK

In this Machine Learning project, we will build an AI-based chatbot. A chatbot is an AI system that answers the user’s queries. Many large businesses use chatbots to reply automatically and resolve customer issues. A chatbot needs to be given data in advance before it can answer a user’s query.

So, let’s start with the project.

ChatBot

Conversational agents or chatbots are computer programs that generate responses based on input to emulate human conversations. Simulating human-human interactions is the goal of these applications.

The majority of chatbots are used in businesses and corporate organizations, including government, non-profit, and private organizations. In addition to providing customer service, product suggestions, and product inquiries, they can also serve as personal assistants.

Rule-based chat agents, retrieval techniques, and simple machine learning algorithms are commonly used to build these chat agents. Using retrieval-based techniques, chat agents scan for keywords in the input phrase and retrieve relevant answers. The retrieved text is pulled from internal or external data sources, including the internet or an organization’s database, based on keyword similarities.
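As a rough illustration of the retrieval idea described above, the sketch below picks the stored answer whose keywords overlap most with the input. The knowledge base, its answers, and the function name retrieve_answer are hypothetical and exist only for this example; a real system would draw on a much larger data source.

```python
import re

# Hypothetical knowledge base: each entry pairs a keyword set with a stored answer.
KNOWLEDGE_BASE = [
    ({"price", "cost", "pricing"}, "Our plans start at a fixed monthly fee."),
    ({"refund", "return"}, "Refunds are processed within a few business days."),
    ({"hours", "open", "opening"}, "Support is available on weekdays."),
]

def retrieve_answer(query):
    """Return the stored answer sharing the most keywords with the query, or None."""
    words = set(re.findall(r"[a-z']+", query.lower()))
    best_answer, best_overlap = None, 0
    for keywords, answer in KNOWLEDGE_BASE:
        overlap = len(keywords & words)  # number of shared keywords
        if overlap > best_overlap:
            best_answer, best_overlap = answer, overlap
    return best_answer

print(retrieve_answer("What is the cost of the premium plan?"))
print(retrieve_answer("Tell me a joke"))  # no keyword matches, so None
```

Counting shared keywords is the simplest possible similarity measure; the project later in this article replaces it with TF-IDF weights and cosine similarity.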

Natural language processing techniques and machine learning algorithms are also used to develop more advanced chatbots. Additionally, many commercial chat engines allow chatbots to be created from client data.

The Model Architecture

NLTK stands for Natural Language Toolkit. It is one of the most widely used NLP libraries for Python, and it provides packages that help machines process human language and respond appropriately to it. NLTK can be used for tasks such as summarization, translation, named entity recognition, relation extraction, and sentiment analysis, among others. In this project, natural language processing techniques are applied to the preprocessed data.

Natural language processing also makes it possible to determine and gauge the semantics of human language. Here, the cleaned data is processed in Python. We use word tokenization, sentence tokenization, stop word removal, stemming, entity recognition, and part-of-speech tagging to turn the data into an interpretable pattern.

Word tokenization converts text into individual word tokens and stores them in a list. Sentence tokenization splits the text into separate sentences.
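The two kinds of tokenization can be sketched with plain regular expressions. This is a deliberate simplification: NLTK's sent_tokenize and word_tokenize handle many more edge cases (abbreviations, contractions, and so on), and the function names below are made up for this example.

```python
import re

def simple_sent_tokenize(text):
    """Split after '.', '!' or '?' when followed by whitespace (a rough heuristic)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def simple_word_tokenize(sentence):
    """Split a sentence into word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", sentence)

text = "A chatbot answers questions. It can also greet users!"
sentences = simple_sent_tokenize(text)
print(sentences)                           # two sentences
print(simple_word_tokenize(sentences[0]))  # words plus the final '.'
```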

Stop words are words that are considered unimportant because they contribute little to the semantics of the text. They also lengthen search time and waste computational resources. Omitting stop words therefore reduces both the time and the memory needed for processing.
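A minimal sketch of stop word removal follows. The stop word list here is a tiny hand-picked set assumed for illustration; NLTK ships a much longer list in its stopwords corpus, and the project itself relies on the built-in English list of TfidfVectorizer.

```python
# Tiny illustrative stop word list (an assumption for this sketch).
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "to", "and", "in", "that"}

def remove_stop_words(tokens):
    """Keep only the tokens that are not stop words (case-insensitive)."""
    return [t for t in tokens if t.lower() not in STOP_WORDS]

tokens = ["A", "chatbot", "is", "a", "program", "that", "answers", "questions"]
print(remove_stop_words(tokens))
```

Only the content-bearing words remain, so later steps have fewer tokens to compare.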

Stemming is a crude method that cuts off the ends or beginnings of words. Its main aim is to reduce a derived word to its base form while keeping the idea behind it. A part-of-speech tagger examines the text and assigns a part of speech to each token, such as verb, adjective, or noun. An entity recognition tool identifies the named entities in the text, such as people and organizations.
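To make the "cutting off the ends of words" idea concrete, here is a toy suffix stripper. The suffix list and the function name crude_stem are assumptions for this sketch; real stemmers such as NLTK's PorterStemmer apply many more rules, and the project code below uses a lemmatizer instead of a stemmer.

```python
SUFFIXES = ("ing", "ed", "es", "s")  # checked in this order

def crude_stem(word):
    """Strip the first matching suffix, keeping at least three characters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

for w in ["talking", "talked", "talks", "is", "running"]:
    print(w, "->", crude_stem(w))
```

The last word shows why the method is called crude: "running" becomes "runn" rather than "run", because the rule knows nothing about doubled consonants.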

After these NLP techniques are applied to the cleaned data, a definitive set of keywords is derived. These keywords are compared with the keywords in the user dictionary; all keywords are contained in the vocabulary. The sentiment of the context is determined from the keywords obtained from the text, and users are not allowed to send messages whose meaning is inappropriate.

Project Prerequisites

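The required modules for this project are:

- NLTK: pip install nltk

- NumPy: pip install numpy

- scikit-learn: pip install scikit-learn (needed for TfidfVectorizer and cosine_similarity in step 5)

- string: part of the Python standard library, so no installation is needed

These are the libraries we are going to use in this project.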

Python Chatbot Project

The dataset for this project is a text file. The file contains some text about chatbots, taken from Wikipedia. If users ask anything about chatbots, the chatbot answers with a sentence from that text file. You can add more text to this file. The more text you add, the more queries your chatbot can answer.

Please download chatbot python code and dataset from the following link: Python Chatbot Project

Steps to Implement Python Chatbot Project

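1. Import all the modules used in the project.

import random
import string
import warnings

import nltk
import numpy as np

# Tokenizer models required by sent_tokenize and word_tokenize.
# Newer NLTK releases look for 'punkt_tab' instead of 'punkt'.
nltk.download('punkt')
nltk.download('punkt_tab')
# WordNet data required by the lemmatizer.
nltk.download('wordnet')
nltk.download('omw-1.4')

warnings.filterwarnings("ignore")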

2. Read the text file and store its sentences and words in separate variables.

# Read the corpus and lowercase it so that matching is case-insensitive.
with open('chatbot.txt', 'r', errors='ignore') as f:
    raw = f.read().lower()

sent_tokens = nltk.sent_tokenize(raw)  # list of sentences
word_tokens = nltk.word_tokenize(raw)  # list of words

# In a notebook, these expressions preview the first few tokens.
sent_tokens[:2]
word_tokens[:5]

lemmer = nltk.stem.WordNetLemmatizer()

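3. Define the LemTokens and LemNormalize functions, which lemmatize tokens and normalize raw text.

def LemTokens(tokens):
    # Reduce each token to its dictionary form (lemma).
    return [lemmer.lemmatize(token) for token in tokens]

# Maps every punctuation character to None so that str.translate deletes it.
remove_punct_dict = dict((ord(punct), None) for punct in string.punctuation)

def LemNormalize(text):
    # Lowercase, strip punctuation, tokenize, then lemmatize.
    return LemTokens(nltk.word_tokenize(text.lower().translate(remove_punct_dict)))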
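The punctuation-stripping part of LemNormalize can be tried on its own. The sketch below uses the same translation table, but substitutes str.split for nltk.word_tokenize and skips lemmatization so that it runs without any NLTK data; the helper name strip_punct_and_lower is made up for this example.

```python
import string

# Same mapping as in step 3: every punctuation code point maps to None (deleted).
remove_punct_dict = dict((ord(punct), None) for punct in string.punctuation)

def strip_punct_and_lower(text):
    """Lowercase the text and delete all ASCII punctuation characters."""
    return text.lower().translate(remove_punct_dict)

cleaned = strip_punct_and_lower("Hello, World! What's a chatbot?")
print(cleaned)          # hello world whats a chatbot
print(cleaned.split())  # whitespace split as a stand-in for word_tokenize
```

Note that the apostrophe is removed too, so "What's" becomes "whats".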

4. Define the greeting inputs and the possible responses to them, along with a function that returns a greeting.

GREETING_INPUTS = ("hello", "hi", "greetings", "sup", "what's up", "hey")
GREETING_RESPONSES = ["hi", "hey", "*nods*", "hi there", "hello", "I am glad! You are talking to me"]

def greeting(sentence):
    # Return a random greeting if any word of the input is a known greeting;
    # otherwise the function implicitly returns None.
    for word in sentence.split():
        if word.lower() in GREETING_INPUTS:
            return random.choice(GREETING_RESPONSES)
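Because the greeting function compares single whitespace-separated words, an input such as "hello!" does not match (the punctuation stays attached), and the two-word entry "what's up" can never match. The variant below is one possible way to handle both cases; greeting_v2 and _PUNCT_TABLE are hypothetical names, not part of the original project.

```python
import random
import string

GREETING_INPUTS = ("hello", "hi", "greetings", "sup", "what's up", "hey")
GREETING_RESPONSES = ["hi", "hey", "*nods*", "hi there", "hello",
                      "I am glad! You are talking to me"]

# Delete punctuation except the apostrophe, which "what's up" needs.
_PUNCT_TABLE = str.maketrans("", "", string.punctuation.replace("'", ""))

def greeting_v2(sentence):
    """Hypothetical variant of greeting(): tolerates punctuation and phrases."""
    words = sentence.lower().translate(_PUNCT_TABLE).split()
    for phrase in GREETING_INPUTS:
        phrase_words = phrase.split()
        n = len(phrase_words)
        # Slide a window of n words across the input and compare word by word.
        if any(words[i:i + n] == phrase_words for i in range(len(words) - n + 1)):
            return random.choice(GREETING_RESPONSES)
    return None

print(greeting_v2("Hello!"))
print(greeting_v2("what's up?"))
print(greeting_v2("tell me about chatbots"))  # None: no greeting found
```

Matching whole words rather than substrings avoids false positives such as the "hi" inside "this".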

5. Define the model and a function that answers a user’s query. The answers come from the text file that we read at the start. If a query falls outside the scope of the text file, the chatbot replies that it does not understand.

from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

def response(user_response):
    robo_response = ''
    # Temporarily add the query so it is vectorized together with the corpus.
    sent_tokens.append(user_response)
    TfidfVec = TfidfVectorizer(tokenizer=LemNormalize, stop_words='english')
    tfidf = TfidfVec.fit_transform(sent_tokens)
    # Similarity of the query (last row) to every sentence, including itself.
    vals = cosine_similarity(tfidf[-1], tfidf)
    # The highest score normally belongs to the query itself,
    # so take the index of the second-highest score.
    idx = vals.argsort()[0][-2]
    flat = vals.flatten()
    flat.sort()
    req_tfidf = flat[-2]
    if req_tfidf == 0:
        # No sentence shares any weighted term with the query.
        robo_response = robo_response + "I am sorry! I don't understand you"
        return robo_response
    else:
        robo_response = robo_response + sent_tokens[idx]
        return robo_response
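To show what the vectorizer and the similarity measure compute, here is a small pure-Python sketch of TF-IDF weighting followed by cosine similarity. It is a simplified stand-in, not the scikit-learn implementation: it splits on whitespace, skips lemmatization and stop word removal, and compares the query only against the corpus instead of picking the second-highest score. The function names and the three-sentence corpus are assumptions for this example.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """Return one {term: weight} dict per document, scaled to unit length."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        # Term count times a smoothed IDF: ln((1 + N) / (1 + df)) + 1
        vec = {t: c * (math.log((1 + n_docs) / (1 + df[t])) + 1)
               for t, c in counts.items()}
        norm = math.sqrt(sum(w * w for w in vec.values())) or 1.0
        vectors.append({t: w / norm for t, w in vec.items()})
    return vectors

def cosine(a, b):
    # Both vectors have unit length, so the dot product is the cosine.
    return sum(w * b.get(t, 0.0) for t, w in a.items())

def best_match(sentences, query):
    """Return (sentence, score) for the closest sentence, or (None, 0.0)."""
    vectors = tfidf_vectors(sentences + [query])
    query_vec = vectors[-1]
    scores = [cosine(query_vec, v) for v in vectors[:-1]]
    best = max(range(len(scores)), key=scores.__getitem__)
    return (sentences[best], scores[best]) if scores[best] > 0 else (None, 0.0)

corpus = [
    "a chatbot is a software application",
    "chatbots are used in customer service",
    "python is a programming language",
]
print(best_match(corpus, "what is a chatbot"))
print(best_match(corpus, "zebra"))  # nothing in common, so (None, 0.0)
```

The second corpus sentence scores zero for the first query because "chatbots" and "chatbot" are different tokens without lemmatization, which is the gap that LemNormalize closes in the project code.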

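6. Here is an encoder function. It takes a tokenizer, the length to pad each sequence to, and the lines of text to encode. The rest of this project never calls it, and it depends on pad_sequences, which this script does not import.

def encode_sequence(tokenizer, length, lines):
    # Convert each line of text to a sequence of integer ids.
    seq = tokenizer.texts_to_sequences(lines)
    # Pad every sequence to the same length, adding the padding at the end.
    # pad_sequences is assumed to be the Keras utility; import it before calling this function.
    seq = pad_sequences(seq, maxlen=length, padding='post')
    return seq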

7. Write the code that takes the user’s query. The while loop keeps running, so the user can continue to ask the chatbot questions, until the user types “Bye” or thanks the bot.

flag = True
print("ROBO: My name is Chatty. I will answer your queries about Chatbots. If you want to exit, type Bye!")

while flag:
    user_response = input()
    user_response = user_response.lower()
    if user_response != 'bye':
        if user_response == 'thanks' or user_response == 'thank you':
            flag = False
            print("ROBO: You are welcome..")
        else:
            # Call greeting() once and reuse the result.
            greet = greeting(user_response)
            if greet is not None:
                print("ROBO: " + greet)
            else:
                print("ROBO: ", end="")
                print(response(user_response))
                # response() appended the query to sent_tokens; remove it again.
                sent_tokens.remove(user_response)
    else:
        flag = False
        print("ROBO: Bye! take care..")

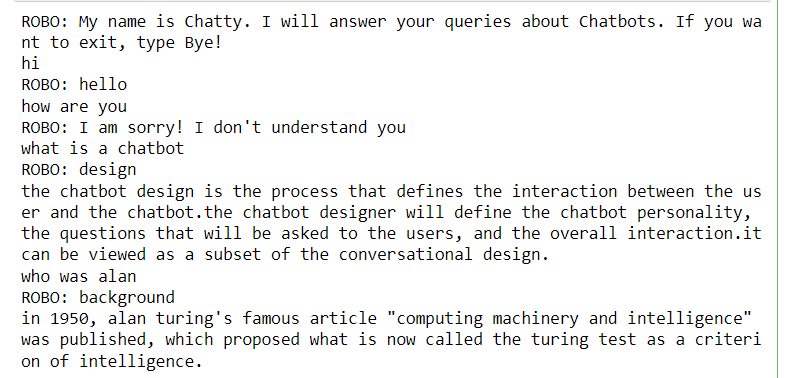

Python Chatbot Output

Summary

In this Machine Learning project, we learned how to build an AI chatbot system. We used TF-IDF to vectorize the text and cosine similarity to find the sentence that best matches the user’s query. We hope that you have learned something new.