Gender and Age Detection Machine Learning Project

In this Machine Learning Project, we will build an age and gender detection system. It takes an image as input and determines the age and gender of the person in the image.

So, let’s build this system.

Age and Gender System

A gender and age detection system detects the age and gender of a person from a photo. The algorithms used in these systems depend heavily on facial features, hair, and lighting. To detect gender and age, we first need to detect the person’s face, so let’s begin by reviewing some important face detection algorithms.

Algorithms that can be used for this task include Haar Cascades, Deep Neural Networks (DNN), Histograms of Oriented Gradients (HoG), and Convolutional Neural Networks (CNN), each with its own advantages and disadvantages. The convolutional neural network is one of the main deep learning algorithms, and it learns directly from images. It suits our case well because we treat both predictions as classification problems: gender has two classes (male and female), and age has multiple classes, one per age range.
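To make the classification framing concrete, here is a minimal pure-Python sketch of how a classifier’s final layer is typically read: raw scores are turned into probabilities with a softmax, and the predicted class is the one with the highest probability. The `softmax` and `predict_label` helpers and the example scores are illustrative assumptions, not code from the pre-trained models.

```python
import math

GENDER_LABELS = ["Male", "Female"]  # two classes for gender


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(probs, labels):
    """Return the label with the highest probability, and that probability."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return labels[best], probs[best]


# Hypothetical raw scores for one face (not real model output).
probs = softmax([2.0, 0.5])
label, p = predict_label(probs, GENDER_LABELS)
print(label, round(p, 3))  # Male 0.818
```

The networks used in this project already end in a probability layer, so in practice only the argmax step is needed on their output.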

Gender Detection with CNN

A popular way to recognize gender is OpenCV’s Fisherfaces implementation. In this example, I’ll take a different approach to gender recognition, developed by the Israeli researchers Gil Levi and Tal Hassner, and use the CNNs they trained. We will load these deep neural networks with OpenCV’s dnn module.

With the dnn module, you can load neural networks into OpenCV’s Net class (cv2.dnn.Net). The module supports importing models from several deep learning frameworks, including TensorFlow, Caffe, and Torch. The researchers mentioned above published their models in Caffe format, so we import them with OpenCV’s Caffe importer (cv2.dnn.readNetFromCaffe, or the generic cv2.dnn.readNet).
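As a rough sketch of the import step, the helper below loads a Caffe model through OpenCV’s `cv2.dnn.readNetFromCaffe`, which takes the `.prototxt` definition first and the `.caffemodel` weights second. The `load_caffe_net` name and the file-existence check are our own additions; `cv2` is imported inside the function so the check can run even where OpenCV is not installed.

```python
import os


def load_caffe_net(prototxt_path, caffemodel_path):
    """Load a Caffe network with OpenCV's dnn module (hypothetical helper)."""
    for path in (prototxt_path, caffemodel_path):
        if not os.path.isfile(path):
            raise FileNotFoundError(f"Model file not found: {path}")
    import cv2  # imported lazily; requires opencv-python

    # readNetFromCaffe(prototxt, caffeModel) returns a cv2.dnn.Net object
    return cv2.dnn.readNetFromCaffe(prototxt_path, caffemodel_path)


# Example usage, assuming the project files are in the working directory:
# genderNet = load_caffe_net("gender_deploy.prototxt", "gender_net.caffemodel")
```

Note that the generic `cv2.dnn.readNet` takes its arguments in the opposite order (weights first, configuration second) and detects the framework automatically.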

Age Recognition with CNN

Age recognition uses a prototxt file and a Caffe model file in the same way as gender recognition, except that the prototxt file is “age_deploy.prototxt” and the Caffe model file is “age_net.caffemodel”. The CNN’s output layer (the probability layer) contains eight values, one for each of the eight age classes: 0–2, 4–6, 8–13, 15–20, 25–32, 38–43, 48–53, and 60+. A Caffe model has two associated files:

- prototxt – The definition of the CNN. This file defines the inputs, outputs, and function of each layer in the neural network.

- caffemodel – Contains information about the trained neural network, that is, its trained weights.
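Because the age network outputs eight probabilities rather than a single number, reading its result means picking a bucket. The sketch below ranks the buckets and returns the top k. The probability vector is made up for illustration, and showing a runner-up bucket is our own suggestion, not something the original models require.

```python
AGE_BUCKETS = ["0-2", "4-6", "8-13", "15-20", "25-32", "38-43", "48-53", "60+"]


def top_k_ages(probs, k=2):
    """Return the k most likely age buckets as (bucket, probability) pairs."""
    if len(probs) != len(AGE_BUCKETS):
        raise ValueError("expected one probability per age bucket")
    ranked = sorted(zip(AGE_BUCKETS, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical output of the probability layer for one face (sums to 1.0).
example = [0.01, 0.02, 0.03, 0.10, 0.55, 0.20, 0.06, 0.03]
print(top_k_ages(example))  # [('25-32', 0.55), ('38-43', 0.2)]
```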

Architecture

For face detection, we have a .pb file, which holds the graph definition and the trained weights of the model. The project folder contains the following files: opencv_face_detector.pbtxt, opencv_face_detector_uint8.pb, age_deploy.prototxt, age_net.caffemodel, gender_deploy.prototxt, gender_net.caffemodel, and a few pictures to try the project on. A .pb file stores a protobuf in binary format, while a .pbtxt file stores it in text format; the two face detector files are TensorFlow files.
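Since the project depends on these model files being present, a quick check before loading can save a confusing error later. This is a minimal sketch: `REQUIRED_FILES` lists the face and age files named here plus the gender pair that the code loads, and the assumption is that all of them sit side by side in one folder.

```python
from pathlib import Path

# Files used by this project; assumed to sit together in one folder.
REQUIRED_FILES = [
    "opencv_face_detector.pbtxt",     # face detector config (TensorFlow, text)
    "opencv_face_detector_uint8.pb",  # face detector weights (TensorFlow, binary)
    "age_deploy.prototxt",            # age network definition (Caffe)
    "age_net.caffemodel",             # age network weights (Caffe)
    "gender_deploy.prototxt",         # gender network definition (Caffe)
    "gender_net.caffemodel",          # gender network weights (Caffe)
]


def missing_model_files(folder="."):
    """Return the required model files that are not present in `folder`."""
    base = Path(folder)
    return [name for name in REQUIRED_FILES if not (base / name).is_file()]


if __name__ == "__main__":
    missing = missing_model_files()
    if missing:
        print("Missing model files:", ", ".join(missing))
```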

- For age and gender, the .prototxt file describes the network configuration, and the .caffemodel file describes its internal states (the trained parameters of its layers).

- Using the argparse library, we can parse arguments from the command line to retrieve an image. To classify the gender and age of a person in a still image, we parse the argument containing the path to the image.

- Initialize the protocol buffer (configuration) and model (weights) file paths for face, age, and gender.

- Make a list of the age ranges and genders you’d like to classify and set the mean values for the model.

- Now load the networks with the readNet() method. The first parameter is the file that stores the trained weights, and the second is the file that stores the network configuration.

- To classify faces in a webcam stream, capture the video stream. Set the padding around each detected face to 20 pixels.

- Until a key is pressed, read the stream frame by frame, storing the results in hasFrame and frame. If no frame is returned (for example, when the stream ends), call cv2’s waitKey() and then break out of the loop.

- Call the face detection function (detectFace() in the code below) with the faceNet and frame parameters, and store its output under the names resultImg and faceBoxes. If faceBoxes is empty, no face was detected.
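Several of the steps above can be sketched as small, testable helpers: parsing an optional `--image` argument, converting the face detector’s normalized output into pixel boxes above a confidence threshold, and cropping a face with clamped padding. This is a minimal sketch under assumptions: the `--image` flag name and the helper names are hypothetical, and the detection layout (shape (1, 1, N, 7), with confidence at index 2 and x1, y1, x2, y2 in 0..1 at indices 3 to 6) follows how this project’s code indexes the detector output. Only NumPy and the standard library are used.

```python
import argparse

import numpy as np


def build_parser():
    """Command-line parser: --image is optional; without it, use the webcam."""
    parser = argparse.ArgumentParser(description="Gender and age detection")
    parser.add_argument("--image", help="path to an input image (default: webcam)")
    return parser


def detections_to_boxes(detections, width, height, ct=0.7):
    """Turn normalized detections into integer pixel boxes [x1, y1, x2, y2]."""
    boxes = []
    for i in range(detections.shape[2]):
        confidence = detections[0, 0, i, 2]
        if confidence > ct:  # keep only confident faces
            x1 = int(detections[0, 0, i, 3] * width)
            y1 = int(detections[0, 0, i, 4] * height)
            x2 = int(detections[0, 0, i, 5] * width)
            y2 = int(detections[0, 0, i, 6] * height)
            boxes.append([x1, y1, x2, y2])
    return boxes


def padded_crop(frame, box, padding=20):
    """Crop a face plus `padding` pixels, clamped to the frame borders."""
    x1, y1, x2, y2 = box
    h, w = frame.shape[:2]
    return frame[max(0, y1 - padding):min(y2 + padding, h),
                 max(0, x1 - padding):min(x2 + padding, w)]


# Fake detector output with two candidates; only the first passes ct=0.7.
dets = np.zeros((1, 1, 2, 7), dtype=np.float32)
dets[0, 0, 0, 2:] = [0.9, 0.25, 0.25, 0.5, 0.5]
dets[0, 0, 1, 2:] = [0.3, 0.0, 0.0, 0.1, 0.1]
frame = np.zeros((480, 640, 3), dtype=np.uint8)
boxes = detections_to_boxes(dets, frame.shape[1], frame.shape[0])
print(boxes)  # [[160, 120, 320, 240]]
print(padded_crop(frame, boxes[0]).shape)  # (160, 200, 3)
```

An empty list from `detections_to_boxes` corresponds to the "no face detected" case.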

Project Prerequisites

This project requires Python 3.6 or later installed on your computer. I used Jupyter Notebook for this project, but you can use any environment you like.

The required modules for this project are –

- OpenCV (4.6.0) – pip install opencv-python

That’s all we need for our project.
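If you want to confirm the setup before running the project, a small check like the following can help. It is a sketch of our own, not part of the project code: it reports whether the Python version meets the 3.6 minimum and which OpenCV version, if any, can be imported.

```python
import importlib.util
import sys


def check_environment(min_python=(3, 6)):
    """Report whether Python is new enough and which OpenCV version is importable."""
    python_ok = sys.version_info[:2] >= min_python
    cv2_version = None
    if importlib.util.find_spec("cv2") is not None:
        try:
            import cv2
            cv2_version = cv2.__version__
        except ImportError:  # installed but not loadable (e.g. missing system libraries)
            cv2_version = None
    return python_ok, cv2_version


python_ok, cv2_version = check_environment()
print("Python OK:", python_ok, "| OpenCV:", cv2_version or "not installed")
```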

Gender and Age Detection Project

We will use pre-trained models in this project. They were trained on a large number of images, which saves us the time and resources of training them ourselves. Please download the dataset & the Gender and Age Detection Project code from the following link: Gender and Age Detection Project

Steps to Implement

1. Import the modules and define the paths to the model and configuration (prototxt/pbtxt) files. These files contain models that were pre-trained on many images, so we don’t have to train them here, which saves time and resources.

```python
import cv2  # importing the OpenCV library

facP = 'opencv_face_detector.pbtxt'     # face detector configuration (text protobuf)
facM = 'opencv_face_detector_uint8.pb'  # face detector weights (binary protobuf)
ageP = 'age_deploy.prototxt'            # age network definition
ageM = 'age_net.caffemodel'             # age network weights
genderP = 'gender_deploy.prototxt'      # gender network definition
genderM = 'gender_net.caffemodel'       # gender network weights
```

2. Next, we define a function that detects faces in a frame from the video camera using OpenCV’s DNN module. It draws a boundary around each detected face and returns the annotated frame along with the face boxes. We then define the gender and age labels and load the face, age, and gender networks from the model files; these are later used to predict the gender and age of the person.

```python
def detectFace(net, frame, ct=0.7):  # detects faces and draws a box around each
    framea = frame.copy()  # work on a copy so the original frame stays untouched
    frameHeight = framea.shape[0]  # height of the frame
    frameWidth = framea.shape[1]  # width of the frame
    # preprocess the frame into a blob for the DNN
    blob = cv2.dnn.blobFromImage(framea, 1.0, (227, 227), [124.96, 115.97, 106.13], swapRB=True, crop=False)
    net.setInput(blob)  # feed the blob to the face detector
    detections = net.forward()  # run the detector; output shape is (1, 1, N, 7)
    fbox = []  # list of face boxes
    for i in range(detections.shape[2]):
        confidence = detections[0, 0, i, 2]  # confidence score of detection i
        if confidence > ct:  # keep only detections above the threshold
            x1 = int(detections[0, 0, i, 3] * frameWidth)   # left
            y1 = int(detections[0, 0, i, 4] * frameHeight)  # top
            x2 = int(detections[0, 0, i, 5] * frameWidth)   # right
            y2 = int(detections[0, 0, i, 6] * frameHeight)  # bottom
            fbox.append([x1, y1, x2, y2])
            cv2.rectangle(framea, (x1, y1), (x2, y2), (0, 255, 0), 2)  # draw the boundary
    return framea, fbox  # annotated frame and the list of face boxes


gL = ['Male', 'Female']  # gender labels
aL = ['(0-2)', '(4-6)', '(8-13)', '(15-20)', '(25-32)', '(38-43)', '(48-53)', '(60-100)']  # age ranges

faceNet = cv2.dnn.readNet(facM, facP)         # load the face detection model
ageNet = cv2.dnn.readNet(ageM, ageP)          # load the age model
genderNet = cv2.dnn.readNet(genderM, genderP)  # load the gender model
```

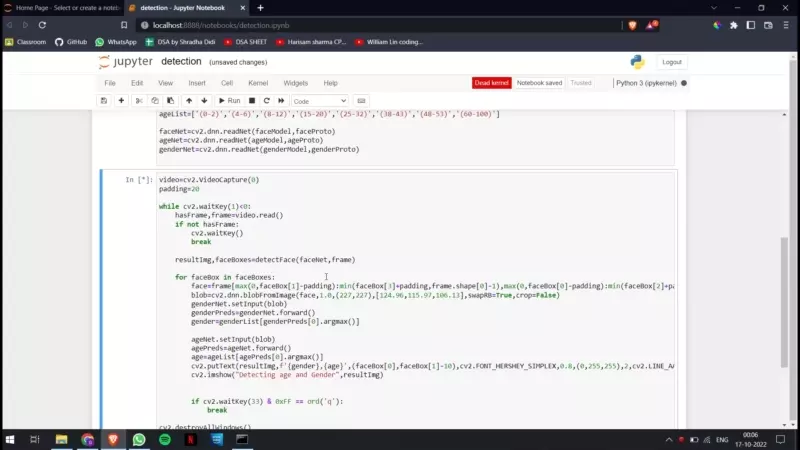
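To show what `cv2.dnn.blobFromImage` does with the arguments used above, here is a simplified NumPy re-implementation. It is a sketch for understanding only: it assumes the image is already at the target size (it skips resizing and cropping) and follows OpenCV’s documented behavior, where with `swapRB=True` the channels are swapped to RGB, the mean (given in R, G, B order) is subtracted, the result is multiplied by the scale factor, and the array is reordered to NCHW (batch, channels, height, width).

```python
import numpy as np


def blob_from_resized_image(image, scalefactor=1.0, mean=(0.0, 0.0, 0.0), swap_rb=False):
    """Simplified sketch of cv2.dnn.blobFromImage for an already-resized HxWx3 image.

    Skips resizing/cropping. Returns a float32 array of shape (1, 3, H, W).
    """
    img = image.astype(np.float32)
    if swap_rb:
        img = img[:, :, ::-1]  # BGR -> RGB
    img = (img - np.asarray(mean, dtype=np.float32)) * scalefactor  # subtract mean, then scale
    return img.transpose(2, 0, 1)[np.newaxis, ...]  # HWC -> NCHW with a batch dimension


# A uniform 227x227 BGR image: blue=10, green=20, red=30.
image = np.zeros((227, 227, 3), dtype=np.uint8)
image[:] = (10, 20, 30)
blob = blob_from_resized_image(image, 1.0, (124.96, 115.97, 106.13), swap_rb=True)
print(blob.shape)  # (1, 3, 227, 227)
```

On a 227x227 input, the real `cv2.dnn.blobFromImage` call with the same arguments should give the same values up to floating-point rounding.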

3. Now we use OpenCV to turn on the video camera, detect faces with the function defined in the previous step, and then predict the age and gender of each detected face.

```python
video = cv2.VideoCapture(0)  # start the default video camera so we can detect faces
padding = 20  # extra pixels kept around each detected face

while cv2.waitKey(1) < 0:  # keep running until a key is pressed
    hasFrame, frame = video.read()  # read the next frame from the camera
    if not hasFrame:  # no frame was returned (camera closed or stream ended)
        cv2.waitKey()  # wait for a key press before exiting
        break

    # detect faces; resultImg is a copy of the frame with boxes drawn on it
    resultImg, faceBoxes = detectFace(faceNet, frame)

    for faceBox in faceBoxes:  # handle every face found in the frame
        # crop the face plus padding, clamped to the frame borders
        face = frame[max(0, faceBox[1] - padding):min(faceBox[3] + padding, frame.shape[0]),
                     max(0, faceBox[0] - padding):min(faceBox[2] + padding, frame.shape[1])]
        if face.size == 0:  # skip boxes that fall completely outside the frame
            continue

        blob = cv2.dnn.blobFromImage(face, 1.0, (227, 227), [124.96, 115.97, 106.13], swapRB=True, crop=False)

        genderNet.setInput(blob)  # pass the face blob to the gender network
        genderPreds = genderNet.forward()  # probabilities for each gender
        gender = gL[genderPreds[0].argmax()]  # pick the most likely gender

        ageNet.setInput(blob)  # pass the same blob to the age network
        agePreds = ageNet.forward()  # probabilities for each age range
        age = aL[agePreds[0].argmax()]  # pick the most likely age range

        # write "gender,age" just above the face box
        cv2.putText(resultImg, f'{gender},{age}', (faceBox[0], faceBox[1] - 10),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.8, (0, 255, 255), 2, cv2.LINE_AA)

    cv2.imshow("Gender and Age", resultImg)  # show the annotated frame
    if cv2.waitKey(33) & 0xFF == ord('q'):  # also stop when 'q' is pressed
        break

video.release()  # release the camera
cv2.destroyAllWindows()  # close the OpenCV window
```
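The per-face classification inside the loop can also be factored into a function that takes the networks as parameters. This is a minimal sketch; the `classify_face` name and the `FakeNet` stand-in are hypothetical. It relies only on the `setInput()` and `forward()` calls used above, so it should work with the project’s `cv2.dnn.Net` objects and can be exercised without the model files by passing stand-in objects.

```python
import numpy as np

gL = ['Male', 'Female']
aL = ['(0-2)', '(4-6)', '(8-13)', '(15-20)', '(25-32)', '(38-43)', '(48-53)', '(60-100)']


def classify_face(blob, gender_net, age_net, gender_labels=gL, age_labels=aL):
    """Run both networks on one preprocessed face blob and return (gender, age)."""
    gender_net.setInput(blob)
    gender = gender_labels[int(np.argmax(gender_net.forward()[0]))]
    age_net.setInput(blob)
    age = age_labels[int(np.argmax(age_net.forward()[0]))]
    return gender, age


class FakeNet:
    """Hypothetical stand-in for cv2.dnn.Net that returns fixed probabilities."""

    def __init__(self, probs):
        self.probs = np.asarray([probs], dtype=np.float32)  # shape (1, num_classes)
        self.last_input = None

    def setInput(self, blob):
        self.last_input = blob

    def forward(self):
        return self.probs


blob = np.zeros((1, 3, 227, 227), dtype=np.float32)
gender, age = classify_face(blob, FakeNet([0.2, 0.8]), FakeNet([0, 0, 0, 0.1, 0.7, 0.2, 0, 0]))
print(f"{gender},{age}")  # Female,(25-32)
```

Passing the networks in explicitly, instead of reading globals, keeps the function reusable for still images as well as webcam frames.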

Gender and Age Detection Project Output

Summary

In this Machine Learning project, we built an age and gender detection system that uses OpenCV to turn on the video camera, draws a boundary around each face, and predicts the person’s age and gender. We hope you learned something new from this project.