SVM Kernel Functions

SVM algorithms rely on a family of mathematical functions known as kernels. A kernel takes data as input and transforms it into the required form. Different SVM algorithms use different kinds of kernel functions. Common examples include the linear, polynomial, radial basis function (RBF), and sigmoid kernels.

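What is a Kernel?

A kernel helps an SVM solve problems that would otherwise be hard. It offers a shortcut that avoids complicated computations: instead of explicitly mapping the data into a higher-dimensional space, the kernel computes inner products in that space directly from the original inputs. This gives us extra dimensionality while keeping the computation cheap. With some kernels, such as RBF, the implicit feature space is even infinite-dimensional.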
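To make the shortcut concrete, here is a minimal sketch that compares an explicit feature map with the matching kernel. The degree-2 homogeneous polynomial kernel and the names `phi` and `poly2_kernel` are assumptions chosen for illustration; they do not come from any particular SVM library.

```python
import math

def dot(u, v):
    """Plain inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def phi(x):
    """Explicit degree-2 feature map for a 2-D input (x1, x2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def poly2_kernel(x, y):
    """Same inner product, computed without ever building phi(x)."""
    return dot(x, y) ** 2

x, y = (1.0, 2.0), (3.0, 4.0)
explicit = dot(phi(x), phi(y))   # map to 3-D first, then take the inner product
shortcut = poly2_kernel(x, y)    # stay in 2-D and square the inner product
print(explicit, shortcut)        # the two values agree up to rounding
```

Both routes give the same number (about 121 here), but the kernel never constructs the higher-dimensional vectors.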

Kernels are a way of solving non-linear problems with linear classifiers. This is known as the kernel trick. In SVM code, the kernel function is passed as an argument. The kernel also helps determine the decision boundary in higher dimensions. It can be of any type, from linear to polynomial.

Rules of SVM Kernel Functions

Kernel functions must follow a set of rules.

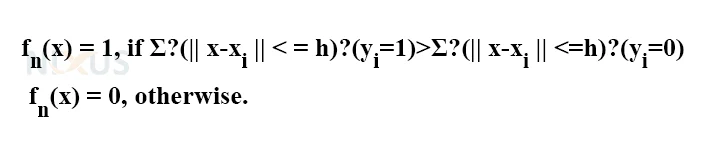

These rules also guide which kernel should be used for classification. A closely related method is the moving window classifier, also known as the window function. Its definition uses the following terms:

The summations run from i=1 to n. The width of the window is denoted by ‘h.’ The rule assigns weight to the points that lie within a given distance of ‘x.’

‘xi’ are the points that are close to ‘x’.

Ideally, the weights should vary smoothly with the distance between x and xi rather than switching abruptly. Weight functions of this kind are called kernel functions. A kernel function is written as K: R^d → R.
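The moving window rule described above can be sketched as follows. This is an illustration under stated assumptions, not the article's exact formula: labels are assumed to be 0 or 1, distance is Euclidean, the weight is the simple box kernel (1 inside the unit ball, 0 outside), and the names `box_kernel` and `moving_window_classify` are hypothetical.

```python
import math

def box_kernel(u):
    """Return 1 if the vector u lies inside the unit ball, else 0."""
    return 1 if math.sqrt(sum(c * c for c in u)) <= 1 else 0

def moving_window_classify(x, points, labels, h):
    """Majority vote among the training points within distance h of x."""
    votes_one = 0
    votes_zero = 0
    for xi, yi in zip(points, labels):
        # Scale the difference by the window width h before weighting.
        scaled = [(a - b) / h for a, b in zip(x, xi)]
        weight = box_kernel(scaled)
        if yi == 1:
            votes_one += weight
        else:
            votes_zero += weight
    return 1 if votes_one > votes_zero else 0

points = [(0.0, 0.0), (0.2, 0.1), (1.0, 1.0), (1.1, 0.9)]
labels = [0, 0, 1, 1]
print(moving_window_classify((0.1, 0.0), points, labels, h=0.5))  # 0
print(moving_window_classify((1.0, 0.9), points, labels, h=0.5))  # 1
```

Replacing `box_kernel` with a smooth function of distance gives the smoothly weighted version that the text calls a kernel function.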

Working of SVM Kernel Functions

As described earlier, the kernel trick lets a linear classifier solve a non-linear problem, and the kernel function is passed to the SVM code as an argument. The kernel shapes the hyperplane and the decision boundary. In the SVM program, we can change the value of the kernel parameter.

The kernel can be of any type, from linear to polynomial. If the kernel is linear, the decision boundary is also linear: a straight line for two-dimensional data, and a hyperplane in general. Kernel functions also help determine decision boundaries in higher dimensions.
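The effect of swapping the kernel argument can be seen in a small experiment. The sketch below uses a kernel perceptron without a bias term as a simpler stand-in for an SVM (it is not an SVM solver), trained on XOR-style data that no straight line through the origin can separate. All function names and the `gamma` parameter are assumptions made for this example.

```python
import math

def linear_kernel(x, y):
    return sum(a * b for a, b in zip(x, y))

def rbf_kernel(x, y, gamma=1.0):
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))

def train_kernel_perceptron(X, y, kernel, epochs=50):
    """Return per-sample mistake counts, which act as dual coefficients."""
    alpha = [0] * len(X)
    for _ in range(epochs):
        mistakes = 0
        for i, (xi, yi) in enumerate(zip(X, y)):
            score = sum(a * yj * kernel(xj, xi) for a, xj, yj in zip(alpha, X, y))
            if yi * score <= 0:      # misclassified or on the boundary
                alpha[i] += 1
                mistakes += 1
        if mistakes == 0:            # every training point is classified correctly
            break
    return alpha

def predict(x, X, y, alpha, kernel):
    score = sum(a * yj * kernel(xj, x) for a, xj, yj in zip(alpha, X, y))
    return 1 if score > 0 else -1

def accuracy(X, y, kernel):
    alpha = train_kernel_perceptron(X, y, kernel)
    hits = sum(predict(xi, X, y, alpha, kernel) == yi for xi, yi in zip(X, y))
    return hits / len(X)

# XOR-style data: opposite corners share a label.
X = [(0, 0), (1, 1), (0, 1), (1, 0)]
y = [-1, -1, 1, 1]
print("linear:", accuracy(X, y, linear_kernel))
print("rbf:", accuracy(X, y, rbf_kernel))
```

Only the kernel argument changes between the two runs. The linear kernel cannot classify all four points, while the RBF kernel separates them.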

Advantages of SVM Kernel Functions

We don’t need to perform the complicated computations ourselves; the kernel function does the heavy lifting. We only need to provide the input and select a suitable kernel. Choosing the kernel and its parameters carefully can also help control overfitting in an SVM.

Disadvantages of Kernel Functions

Overfitting is likely when the data has more features than samples. We can either collect more data or select a more appropriate kernel to address the problem.

Some kernels, such as RBF, work well with smaller amounts of data. However, because RBF is a universal kernel that can fit very complex boundaries, using it on small datasets can increase the risk of overfitting.
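One way to see why an RBF kernel can overfit is to look at its kernel (Gram) matrix for different widths. The sketch below assumes the common parameterization exp(-gamma * ||x - y||^2); the `gamma` name and the sample points are assumptions chosen for illustration.

```python
import math

def rbf_kernel(x, y, gamma):
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))

def gram_matrix(points, gamma):
    """Pairwise kernel values for every pair of points."""
    return [[rbf_kernel(p, q, gamma) for q in points] for p in points]

points = [(0.0,), (1.0,), (2.0,)]
for gamma in (0.1, 100.0):
    print("gamma =", gamma)
    for row in gram_matrix(points, gamma):
        print(["%.3f" % v for v in row])
```

With a small `gamma`, neighbouring points look similar to each other. With a very large `gamma`, the matrix is close to the identity: each training point resembles only itself, so a model can memorize the training set without generalizing.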

Types of Kernel Functions:

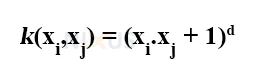

1. Polynomial Kernel Function

This is a general form of kernel with degree greater than one. It is popular in image processing.

This comes in two types:

- Homogeneous Polynomial Kernel Function

- Inhomogeneous (sometimes called heterogeneous) Polynomial Kernel Function

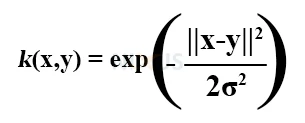
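Both polynomial variants can be sketched in one function. The parameter names `degree` and `coef0` are assumptions borrowed from common usage: a zero constant gives the homogeneous form, and a positive constant gives the inhomogeneous form.

```python
def polynomial_kernel(x, y, degree=2, coef0=0.0):
    """Compute (x . y + coef0) ** degree; coef0 = 0 is the homogeneous form."""
    return (sum(a * b for a, b in zip(x, y)) + coef0) ** degree

x, y = (1.0, 2.0), (3.0, 4.0)
print(polynomial_kernel(x, y, degree=2, coef0=0.0))  # homogeneous: 121.0
print(polynomial_kernel(x, y, degree=2, coef0=1.0))  # inhomogeneous: 144.0
```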

2. RBF Gaussian Kernel Function

RBF stands for radial basis function. We use this kernel when we have no prior knowledge about the data. It is:

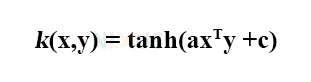

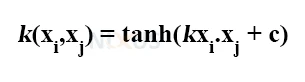
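A minimal sketch of the Gaussian RBF kernel, assuming the widely used form exp(-||x - y||^2 / (2 * sigma^2)). The bandwidth name `sigma` is an assumption, and some libraries use gamma = 1 / (2 * sigma^2) instead.

```python
import math

def gaussian_rbf_kernel(x, y, sigma=1.0):
    """Similarity that decays with the squared Euclidean distance."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))

print(gaussian_rbf_kernel((0.0, 0.0), (0.0, 0.0)))  # identical points give 1.0
print(gaussian_rbf_kernel((0.0, 0.0), (1.0, 0.0)))  # nearby point, smaller value
print(gaussian_rbf_kernel((0.0, 0.0), (3.0, 0.0)))  # distant point, close to 0
```

The value depends only on the distance between the two points, which is what makes the kernel "radial".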

3. Sigmoid Kernel Function

This kernel is mainly used as a stand-in for neural network models. It applies the hyperbolic tangent to a scaled and shifted inner product of the two inputs: K(x, y) = tanh(α xᵀy + c).

4. Hyperbolic Tangent Kernel Function

This kernel is also used in neural network implementations. It is closely related to the sigmoid kernel above, which is built on the same tanh function.

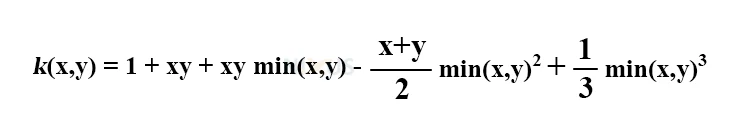
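The sigmoid and hyperbolic tangent kernels can both be sketched with a single function, assuming the usual form tanh(alpha * x . y + c). The parameter names `alpha` (slope) and `c` (offset) are assumptions for this example.

```python
import math

def sigmoid_kernel(x, y, alpha=1.0, c=0.0):
    """Hyperbolic tangent of a scaled and shifted inner product."""
    return math.tanh(alpha * sum(a * b for a, b in zip(x, y)) + c)

print(sigmoid_kernel((1.0, 0.0), (0.0, 1.0)))             # orthogonal inputs give 0.0
print(sigmoid_kernel((1.0, 1.0), (1.0, 1.0), alpha=0.5))  # equals tanh(1.0)
```

The output always lies between -1 and 1. Unlike the polynomial and RBF kernels, this function is not a valid positive semi-definite kernel for every choice of `alpha` and `c`, so the parameters usually need tuning.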

5. Linear splines kernel in one-dimension

It is useful when working with large sparse data vectors. It is often used in text classification and also performs well on regression problems.

6. Graph Kernel Function

This kernel computes an inner product on graphs. It compares two graphs to measure how similar they are. Graph kernels are used in fields such as bioinformatics and chemoinformatics, among others.

7. String Kernel Function

This kernel operates on strings: it measures how similar two strings are. It is mostly used in areas such as text classification. String kernels are also valuable in text mining, genomic analysis, and other fields.
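As one concrete example of a string kernel, the sketch below implements a simple k-spectrum kernel, which counts the length-k substrings that two strings share. This is only one of many string kernels, picked here for brevity; the function names are assumptions for this example.

```python
from collections import Counter

def spectrum(s, k):
    """Count every contiguous substring of length k in s."""
    return Counter(s[i:i + k] for i in range(len(s) - k + 1))

def spectrum_kernel(s, t, k=2):
    """Inner product of the two substring-count vectors."""
    cs, ct = spectrum(s, k), spectrum(t, k)
    return sum(count * ct[sub] for sub, count in cs.items())

print(spectrum_kernel("abab", "abab"))  # 5
print(spectrum_kernel("abab", "baba"))  # 4
print(spectrum_kernel("abc", "xyz"))    # 0
```

Strings with many substrings in common score high, and strings with none in common score zero, so an SVM can use the result as a similarity measure without converting text to numeric vectors first.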

8. Tree Kernel Function

This kernel works on tree-structured data. It helps represent the input as trees and lets the SVM distinguish between them. It is useful for language classification and is widely used in NLP.

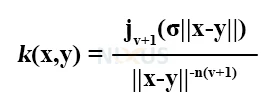

9. Bessel function of the first kind Kernel

It can be used to remove the cross term in mathematical functions. The equation is:

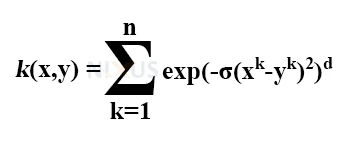

10. ANOVA radial basis kernel

It is mainly applied to regression tasks.

Summary:

This article covered the kernel functions used in support vector machines. There are many more types of kernels, but we have discussed the ones that are most useful and easiest to understand.